Generative AI (e.g., ChatGPT, Gemini) is increasingly becoming part of children’s digital lives. We are seeing a new world of personalized learning apps, AI-assisted storytelling platforms, and creative tools that can turn an idea into a song, drawing, or interactive game. On the surface, the promise is compelling. New technologies can adapt to a child’s needs, help with creative expression, and offer new learning experiences for every individual child. Yet GenAI usage also raises important questions. If an AI tool can give a polished answer right away, how do children grow and wrestle with a problem? When a story, poem, or image can be summoned from a single prompt, what happens to the messy (but important) process of creating ideas from scratch?

To explore these questions, we started by listening to children’s hopes and fears about generative AI. In conversations with 9- and 10-year-olds, children described three main ways they imagined using it:

- First, they thought about AI as an advisor to provide guidance when adults are unavailable.

- Second, children wanted AI as a collaborator to extend their creative and recreational activities.

- Finally, they saw AI as a machine to manage or complete simple routine work.

For example, Clara (age 9) imagined generative AI assisting in songwriting, “My AI helper would make songwriting easier by suggesting relevant adjectives, lyrics, sounds, etc. It would come up with a tune to go with the lyrics whenever inspiration strikes me.” Similarly, Roe (age 10) shared, “When I have an audition or a play that I have to memorize lines for I have no one to practice my lines with. It would be so much easier and would be more fun when the AI can play the other character in the script. It will help me get more emotions and won’t have to worry about other’s lines.”

as a “homework helper”

At the same time, children shared their fears that relying on generative AI for answers could make their own problem-solving skills weaker. This fear was especially evident when children discussed using it for completing homework. As Ava (age 9) stated, “Your homework won’t have any wrong answers, but if the AI did your homework for you, then you won’t get any practice.”

Children also thought that answer-generation without guidance could hinder learning. For example, Tessa (age 9) cautioned, “If you don’t do it yourself [and] if [AI] doesn’t explain what it’s doing, you don’t learn, and you fall behind.” These reflections highlight that children recognize the importance of productive struggle in learning rather than simply receiving correct answers from generative AI.

Still, many of these conversations cast generative AI as something magical, like a mystical tool that seemed to know everything and always have the right answer. That sense of wonder is important. It reminds us that children approach new technologies with excitement. The challenge for parents, educators, and researchers, then, is to nurture children’s curiosity while helping them keep a critical, questioning mindset.

That’s the spirit in which we’ve been developing tools for children to explore generative AI. In our work at KidsTeam UW, we’ve built systems that invite kids to tinker, test, and talk about how AI works. Children get a chance to peek inside and play with its inner workings. When children interact with these tools, we’ve noticed that their questions become sharper and their conversations richer. They start moving from “AI can do anything” to “Here’s how it works, here’s what it’s good at, and here’s what it still gets wrong.”

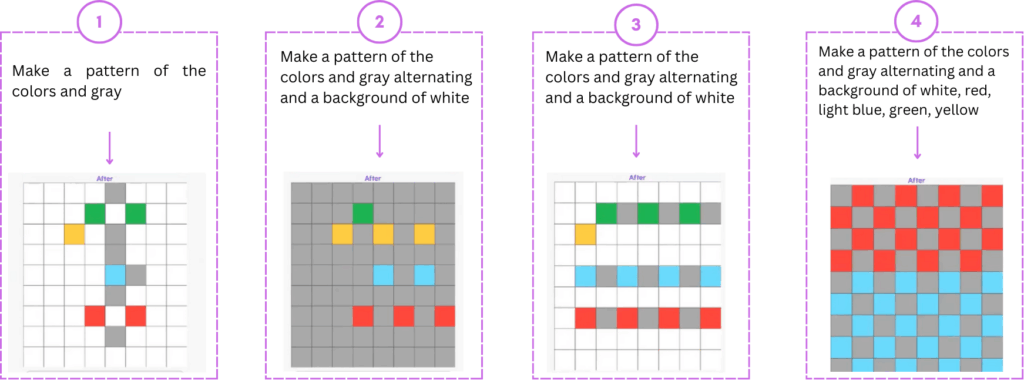

One example of such a system is AI Puzzlers, an interactive game we co-designed with children. AI Puzzlers is built around the Abstraction and Reasoning Corpus (ARC), a collection of visual puzzles designed to test the kind of flexible reasoning that humans are great at but that generative AI still finds challenging.

Here’s how AI Puzzlers works:

- You see a few examples of “before and after” grids.

- Your job is to figure out the hidden rule that transforms the “before” grid into the “after” grid, and then apply that rule to new, unseen grids.
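For readers who like to see the idea in code, here is a minimal sketch of an ARC-style puzzle in Python. It is a toy illustration, not the AI Puzzlers implementation: the grids, the two candidate rules (`mirror_left_right` and `flip_top_bottom`), and the helper `rule_fits` are all made up for this example, and each number in a grid is assumed to stand for a color.

```python
from typing import Callable, List, Tuple

Grid = List[List[int]]  # assumption: each integer stands for a cell color


def mirror_left_right(grid: Grid) -> Grid:
    """Hypothetical hidden rule: flip every row left to right."""
    return [list(reversed(row)) for row in grid]


def flip_top_bottom(grid: Grid) -> Grid:
    """A plausible but wrong guess: flip the grid top to bottom."""
    return [list(row) for row in reversed(grid)]


def rule_fits(rule: Callable[[Grid], Grid],
              examples: List[Tuple[Grid, Grid]]) -> bool:
    """Check whether a guessed rule reproduces every 'after' grid."""
    return all(rule(before) == after for before, after in examples)


# Made-up "before and after" example pairs.
examples = [
    ([[1, 0, 0], [0, 2, 0]], [[0, 0, 1], [0, 2, 0]]),
    ([[3, 3, 0], [0, 0, 4]], [[0, 3, 3], [4, 0, 0]]),
]

# A new, unseen grid that the solver has to transform.
test_grid = [[5, 0, 0], [0, 0, 6]]

# Try each guess against the examples, then apply the one that fits.
for guess in (flip_top_bottom, mirror_left_right):
    if rule_fits(guess, examples):
        print(guess.__name__, "fits:", guess(test_grid))
    else:
        print(guess.__name__, "does not fit the examples")
```

The loop mirrors what a puzzle solver does: propose a rule, check it against every example, and only then apply it to the new grid. A guess that fails on even one example gets thrown out.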

To see what this looks like in practice, check out our short walkthrough video.

When we played AI Puzzlers with children ages 6-12, they approached the puzzles with confidence (Figure 2). The kids noticed the patterns quickly and assumed generative AI would solve the puzzles the same way they did. That confidence made the AI’s mistakes all the more surprising to them. We noticed that laughter and disbelief soon gave way to curiosity. Children tried to understand how a tool that was so fast and seemed so capable could stumble on something they found so simple. They began asking the AI to explain its reasoning, only to discover that its explanations often didn’t match its solutions, or that it couldn’t explain them at all.

Here’s a short video of children solving AI Puzzlers together. Notice how they test their ideas, compare strategies, and react to the AI’s mistakes.

Building on that curiosity, the children then shifted into problem-solving mode. They guided the AI by pointing out its errors, offering corrective hints, testing those hints, and refining their instructions in response to its outputs. Some paired their directions with visual descriptions; others experimented with making their instructions more precise so the AI could follow them. Even with this careful guidance, the AI often made mistakes (Figure 3). But instead of sweeping those errors under the rug, children brought them into the open. Each surprising or incorrect response became a moment to pause and ask: Why might it have done that? What patterns is it drawing on? How is it different from how I solve this puzzle?

These moments weren’t just about fixing the AI’s mistakes; they were about helping children see AI outputs as something they could question, probe, and reshape. By making generative AI’s missteps visible and talkable, children could build richer mental models of generative AI’s strengths and limitations. They learned to see that their role wasn’t just to blindly accept AI’s answers.

As generative AI becomes part of children’s everyday worlds, it’s worth exploring how we might give them experiences that invite tinkering, not just with what AI can do, but with the ways it sometimes falls short. When children can see inside the black box, poke at it, and reshape their interactions, they learn something far more valuable than a correct answer. They learn how to think critically, persist through uncertainty, and approach problems with curiosity and creativity. That’s the promise and the responsibility of introducing AI into children’s lives. We can nurture their sense of wonder without letting it slide into blind trust. We can give them space to explore what’s possible with generative AI while helping them recognize what’s not. And, just as importantly, we can remind them that the most powerful kind of intelligence isn’t artificial at all: it’s the human capacity to imagine, question, and keep tinkering until something new and surprising emerges.

Dangol, A., Wolfe, R., Yoo, D., Thiruvillakkat, A., Chickadel, B., & Kientz, J. A. (2025). “If anybody finds out you are in BIG TROUBLE”: Understanding Children’s Hopes, Fears, and Evaluations of Generative AI. In Proceedings of the 24th Interaction Design and Children (pp. 872-877).

Dangol, A., Zhao, R., Wolfe, R., Ramanan, T., Kientz, J. A., & Yip, J. (2025). “AI just keeps guessing”: Using ARC Puzzles to Help Children Identify Reasoning Errors in Generative AI. In Proceedings of the 24th Interaction Design and Children (pp. 444-464).

Aayushi Dangol is a doctoral candidate in Human Centered Design & Engineering at the University of Washington. Her research explores how we can design and deploy AI systems that support children’s learning while safeguarding their agency and developmental needs. She is advised by Dr. Julie Kientz and closely collaborates with Dr. Jason Yip.