When AI Gets It Wrong and What Children Can Learn From It

Generative AI (GenAI) tools such as ChatGPT and Gemini are increasingly becoming part of children’s digital lives. We are seeing a new world of personalized learning apps, AI-assisted storytelling platforms, and creative tools that can turn an idea into a song, drawing, or interactive game. On the surface, the promise is compelling: these technologies can adapt to each child’s needs, support creative expression, and offer new kinds of learning experiences. Yet GenAI also raises important questions. If an AI tool can give a polished answer right away, how do children learn to wrestle with a problem and grow from it? When a story, poem, or image can be summoned from a single prompt, what happens to the messy (but important) process of creating ideas from scratch?

To explore these questions, we started by listening to children’s hopes and fears about generative AI. In conversations with 9- and 10-year-olds, children described three main ways they imagined using it:

- First, they imagined AI as an advisor that could provide guidance when adults are unavailable.

- Second, they wanted AI as a collaborator that could extend their creative and recreational activities.

- Finally, they saw AI as a machine that could manage or complete simple routine work.

For example, Clara (age 9) imagined generative AI assisting with songwriting: “My AI helper would make songwriting easier by suggesting relevant adjectives, lyrics, sounds, etc. It would come up with a tune to go with the lyrics whenever inspiration strikes me.” Similarly, Roe (age 10) shared: “When I have an audition or a play that I have to memorize lines for I have no one to practice my lines with. It would be so much easier and would be more fun when the AI can play the other character in the script. It will help me get more emotions and won’t have to worry about other’s lines.”

Figure 1: A child’s drawing imagining AI as a “homework helper”

At the same time, children shared their fears that relying on generative AI for answers could weaken their own problem-solving skills. This fear was especially evident when children discussed using it to complete homework. As Ava (age 9) put it, “Your homework won’t have any wrong answers, but if the AI did your homework for you, then you won’t get any practice.”

Children also thought that answer-generation without guidance could hinder learning. For example, Tessa (age 9) cautioned, “If you don’t do it yourself [and] if [AI] doesn’t explain what it’s doing, you don’t learn, and you fall behind.” These reflections highlight that children recognize the importance of productive struggle in learning rather than simply receiving correct answers from generative AI.

Still, many of these conversations portrayed generative AI as something magical: a mystical tool that seemed to know everything and always have the right answer. That sense of wonder is important. It reminds us that children approach new technologies with excitement. The challenge for parents, educators, and researchers, then, is to nurture children’s curiosity while helping them keep a critical, questioning mindset.

That’s the spirit in which we’ve been developing tools for children to explore generative AI. In our work at KidsTeam UW, we’ve built systems that invite kids to tinker, test, and talk about how AI works. Children get a chance to peek inside and play with its inner workings. When children interact with these tools, we’ve noticed that their questions become sharper and their conversations richer. They start moving from “AI can do anything” to “Here’s how it works, here’s what it’s good at, and here’s what it still gets wrong.”

One example of such a system is AI Puzzlers, an interactive game we co-designed with children. AI Puzzlers is built around the Abstraction and Reasoning Corpus (ARC), a collection of visual puzzles designed to test the kind of flexible reasoning that humans are great at but that generative AI still finds challenging.

Here’s how AI Puzzlers works:

You see a few examples of “before and after” grids.

Your job is to figure out the hidden rule that transforms the “before” grid into the “after” grid, and then apply that rule to new, unseen grids.
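For readers curious about what a puzzle looks like "under the hood," here is a minimal Python sketch of an ARC-style task. Each grid is a list of rows, and each number stands for a color (0 for an empty cell). The grids and the two candidate rules (`mirror_left_right` and `flip_upside_down`) are hypothetical examples made up for illustration; they are not taken from ARC or from AI Puzzlers. The sketch checks which rule turns every "before" grid into its "after" grid, then applies that rule to a new grid.

```python
from typing import Callable, Optional

# Hypothetical toy task: a grid is a list of rows; numbers stand for colors (0 = empty).
Grid = list[list[int]]
Rule = Callable[[Grid], Grid]

# Made-up "before and after" examples (not from ARC or AI Puzzlers).
train_pairs: list[tuple[Grid, Grid]] = [
    ([[1, 0], [0, 0]], [[0, 1], [0, 0]]),
    ([[2, 3, 0], [0, 0, 4]], [[0, 3, 2], [4, 0, 0]]),
]


def mirror_left_right(grid: Grid) -> Grid:
    """Reverse each row, like looking at the grid in a mirror."""
    return [list(reversed(row)) for row in grid]


def flip_upside_down(grid: Grid) -> Grid:
    """Reverse the order of the rows."""
    return [list(row) for row in reversed(grid)]


# A tiny, hand-picked set of hypothetical rules to try.
candidate_rules: dict[str, Rule] = {
    "mirror left-right": mirror_left_right,
    "flip upside down": flip_upside_down,
}


def find_rule(pairs: list[tuple[Grid, Grid]]) -> Optional[tuple[str, Rule]]:
    """Return the first candidate rule that explains every before/after pair."""
    for name, rule in candidate_rules.items():
        if all(rule(before) == after for before, after in pairs):
            return name, rule
    return None


test_input: Grid = [[5, 0, 0], [0, 6, 0]]
result = find_rule(train_pairs)
if result is None:
    print("No candidate rule fits every example.")
else:
    name, rule = result
    print(f"Rule: {name}")    # Rule: mirror left-right
    print(rule(test_input))   # [[0, 0, 5], [0, 6, 0]]
```

Picking from a short, fixed list of rules keeps this toy simple. In real ARC puzzles the space of possible rules is open-ended, which is part of what makes them hard for AI systems.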

To see what this looks like in practice, check out our short walkthrough video.

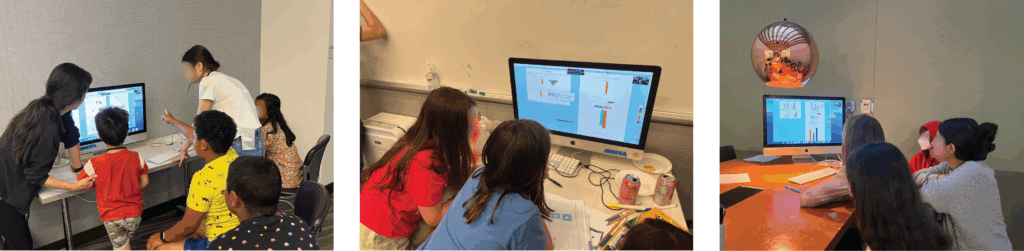

When we played AI Puzzlers with children ages 6 to 12, they approached the puzzles with confidence (Figure 2). The kids noticed the patterns quickly and assumed generative AI would solve the puzzles the same way they did. That confidence made the AI’s mistakes all the more surprising to them. We noticed that laughter and disbelief soon gave way to curiosity. Children tried to understand how a tool that was so fast and seemed so capable could stumble on something they found so simple. They began asking the AI to explain its reasoning, only to discover that its explanations often didn’t match its solutions, or that it couldn’t explain them at all.

Figure 2: Children engaging with AI Puzzlers during KidsTeam UW

Here’s a short video of children solving AI Puzzlers together. Notice how they test their ideas, compare strategies, and react to the AI’s mistakes.

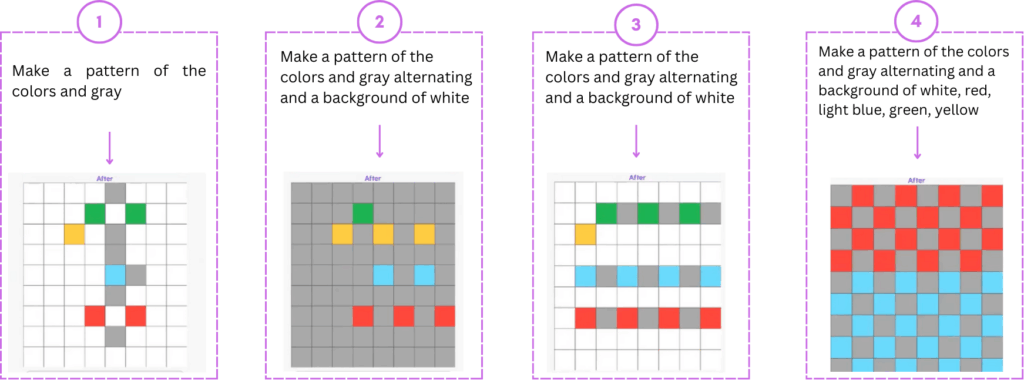

Building on that curiosity, the children then shifted into problem-solving mode. They guided the AI by pointing out its errors, offering corrective hints, testing those hints, and refining their instructions in response to its outputs. Some paired their directions with visual descriptions; others experimented with making their instructions more precise so the AI could follow them. Even with this careful guidance, the AI often made mistakes (Figure 3). But instead of sweeping those errors under the rug, children brought them into the open. Each surprising or incorrect response became a moment to pause and ask: Why might it have done that? What patterns is it drawing on? How is it different from how I solve this puzzle?

Figure 3: Children iteratively refined their instructions to guide the AI toward solving the puzzle. The sequence shows the increasing specificity of their hints and the AI’s corresponding outputs.

These moments weren’t just about fixing the AI’s mistakes; they were about helping children see AI outputs as something they could question, probe, and reshape. By making generative AI’s missteps visible and talkable, children could build richer mental models of its strengths and limitations. They learned that their role wasn’t to blindly accept the AI’s answers.

As generative AI becomes part of children’s everyday worlds, it’s worth exploring how we might give them experiences that invite tinkering, not just with what AI can do, but with the ways it sometimes falls short. When children can see inside the black box, poke at it, and reshape their interactions with it, they learn something far more valuable than a correct answer: how to think critically, persist through uncertainty, and approach problems with curiosity and creativity. That’s the promise and the responsibility of introducing AI into children’s lives. We can nurture their sense of wonder without letting it slide into blind trust. We can give them space to explore what’s possible with generative AI while helping them recognize what’s not. And, just as importantly, we can remind them that the most powerful kind of intelligence isn’t artificial at all; it’s the human capacity to imagine, question, and keep tinkering until something new and surprising emerges.

Dangol, A., Wolfe, R., Yoo, D., Thiruvillakkat, A., Chickadel, B., & Kientz, J. A. (2025). “If anybody finds out you are in BIG TROUBLE”: Understanding Children’s Hopes, Fears, and Evaluations of Generative AI. In Proceedings of the 24th Interaction Design and Children (pp. 872-877).

Dangol, A., Zhao, R., Wolfe, R., Ramanan, T., Kientz, J. A., & Yip, J. (2025). “AI just keeps guessing”: Using ARC Puzzles to Help Children Identify Reasoning Errors in Generative AI. In Proceedings of the 24th Interaction Design and Children (pp. 444-464).

Aayushi Dangol is a doctoral candidate in Human Centered Design & Engineering at the University of Washington. Her research explores how we can design and deploy AI systems that support children’s learning while safeguarding their agency and developmental needs. She is advised by Dr. Julie Kientz and closely collaborates with Dr. Jason Yip.

Announcing the 2026 Well-Being by Design Fellowship: Empowering Designers to Help Children Thrive

Over the past two years, we’ve had the privilege of supporting two incredible cohorts of Well-Being by Design Fellows—talented designers reimagining digital experiences that capture children’s attention while centering their well-being. In today’s rapidly evolving digital landscape, this mission is more important than ever.

Applications are now open for the 2026 Fellowship, generously supported by Pinterest.

The fellowship runs for five months, beginning with an in-person kickoff at Sesame Workshop’s headquarters in New York City. Fellows will then take part in a series of interactive virtual sessions, concluding with a public webinar to share their insights with the broader community.

Who Should Apply

We’re seeking mid-career designers currently working on interactive children’s technology or media products. If you’re passionate about embedding well-being principles from the early stages of design, this fellowship is for you.

Why This Matters

The need for thoughtfully designed digital content for children has never been more urgent. With seismic changes unfolding in technology, including the rapid development and deployment of AI, we must keep humans—especially children—at the heart of design.

As Mitch Resnick recently wrote on Medium, we need to expand opportunities for children to develop their most human abilities: to grow as “creative, curious, caring, and collaborative learners.”

That’s why we want to support promising innovations that build on the principles championed by pioneers like Joan Ganz Cooney—innovations that combine equity with bold creativity to ensure that every child, regardless of background, has access to inspiring, developmentally sound digital experiences that spark curiosity, fuel learning, and nurture their full potential.

Designers are uniquely positioned to lead this charge. Through the Well-Being by Design Fellowship, we provide the tools, frameworks, and support designers need to create meaningful, engaging, and enriching experiences for children.

Building a Community of Practice

We recognize that designing for children’s well-being is complex work. That’s why the Fellowship not only offers evidence-based resources and expert feedback, but also brings in direct input from young people through our youth design team. Just as importantly, it connects Fellows to a supportive network of peers who share their commitment to creating positive digital experiences for kids. Alumni often say this community has both strengthened their projects and enhanced their own well-being.

Apply Now

The application deadline for the 2026 Fellowship program is October 13, 2025.

Help us spread the word about this unique opportunity to innovate, amplify young voices, and shape digital experiences that help children thrive. Learn more and apply here.

Hoot Reading: From Playful Beginnings to High-Impact Literacy Edtech

For nearly two decades, edtech entrepreneur Carly Shuler has focused on how technology can best support and amplify the human connections at the heart of teaching and learning.

Carly Shuler with Cooney Center Fellows Glenda Revelle and Gabrielle Cayton-Hodges in 2010

This mission drove her work as the Cooney Center’s inaugural fellow in 2007, and it remains central to her efforts as the Co-CEO and co-founder of Hoot Reading, a one-on-one online literacy tutoring platform enrolling thousands of students every year. Shuler shared a critical lesson she learned during her time at the Cooney Center that’s endured through the rapid changes in technology and her own evolving business model:

“You have to be very intentional when designing technology for children, because most of the technology that ends up in the hands of kids is not meant for human connection,” she said. “You can’t assume that it’s going to happen. You really have to build for it.”

Bridging Generations

Early in her career, Shuler was a brand manager at a major toy and children’s digital entertainment company. She left to pursue a master’s degree in education technology at Harvard’s Graduate School of Education and graduated the same year that Sesame Workshop established the Joan Ganz Cooney Center as an independent research and innovation lab for digital media.

“I felt like there was a big gap between the people making products for kids and the people who really understood child development, education, and psychology. I knew that Sesame was a leader in educating children through informal media,” said Shuler. “Working there really was my dream job.”

After a year-long fellowship at the Cooney Center, Shuler stayed four additional years on staff. One of the projects she worked on was Family Story Play, a collaboration between the Cooney Center and the research arm of the telecom company Nokia. Video calls were just going mainstream at the time, and the project team hoped they could use the technology to link grandparents with grandkids for shared reading time.

From a literacy standpoint, the goal was “dialogic reading”—the interactive, conversational mode of reading between adults and children that sparks engagement in stories and motivation to keep reading. Video calls could presumably give families more opportunities to benefit from this shared reading experience. However, it soon became apparent that behaviors that came naturally to grandparents and young kids reading together in person faltered when mediated by distance and a screen.

According to Miles Ludwig, former vice president of digital media for Sesame Workshop, neither grandparents nor early readers were the ideal demographic for video call interfaces, which were too technical and too abstract for the sustained engagement of dialogic reading.

Story Visit facilitated video calls between adults and children with Sesame Street characters.

“This work was really ahead of its time, because it recognized something we all learned during the pandemic, which was just how challenging video calls can be, especially with younger children,” Ludwig noted.

“Young kids are not going to just sit there and have a conversation with a grandparent. They’re going to hit that big red hangup button in 30 seconds,” said Shuler. “The challenge was to make this experience into something more interactive, less like a conversation and more like play.”

Their solution was Elmo. The beloved Sesame character would play the role of intermediary, sharing the screen with the humans in the Family Story Play product and scaffolding interactions by initiating the reading sessions, suggesting books, and modeling the playful reactions and questions about the unfolding story as the children and adults read together.

The prototype product was a tablet-like device that held physical books, with a sensor that kept track of each page as it was read. According to a 2010 pilot study, both the quantity and quality of co-reading engagement improved with Family Story Play compared to co-reading sessions over a normal video call.

When Nokia closed its independent research arm in 2014, the company offered small grants to researchers who wanted to continue their projects. Tico Ballagas, a research scientist at Nokia who had been developing Family Story Play, convinced Shuler to co-found a company that would transform the physical product into an app called Kindoma (a mashup of the German words kind for kid and oma for grandmother).

Kindoma

Maintaining the human connection while going completely digital required yet another piece of intentional design—on-screen pointer fingers (color-coded for each reader) that kids and grandparents could move around as they read.

“You have to have that magic finger to point out specific words or objects on the screen,” said Shuler. “That shared touch is such an important part of the back and forth reading experience between adult and child.”

While Kindoma was well received and used by tens of thousands of families, according to Shuler, “we were never able to crack the nut on the business model. We couldn’t make enough money from the app to raise proper venture capital.”

In 2016, Ballagas left the company for a new job, “and that left me with this incredible product but no clear idea what to do with it.”

Bridging Students and Teachers

The spark for Hoot Reading was lit over a lunch date between Shuler and her good friend, Maya Kotecha. Kotecha confided that her young daughter was having early difficulties with reading, and Shuler talked about Kindoma and the struggle to create a viable business targeting grandparents and parents.

“Together we decided, let’s try the app in a teaching and learning scenario,” Shuler recalled. Over the summer, they organized a pilot by connecting families in their network who wanted reading help for their kids with a couple of teachers they hired to tutor using the Kindoma app.

“I’ve never seen something that resonated so strongly, when it was really just a scrappy pilot,” said Shuler. “When the pilot ended, the parents were like, please keep it going.”

The Hoot Reading platform connects children with live tutors to read books together.

And so they did. Hoot Reading was born in 2018. Compared to fostering informal reading sessions with extended family members, connecting students with educators presented an entirely new challenge. The magic finger stayed in the new product, but the reading material had to change. Shuler and Kotecha needed to swap out the library of licensed storybooks with decodable books aligned with literacy standards.

“We couldn’t find high-quality decodables that were suitable for our needs and online use, so we wrote our own, more than 300 books that are used for our instruction,” said Shuler. They also needed to build the backend technology to automate the matching of students and tutors, scheduling sessions, and progress reporting.

“At first, we were scheduling kids with tutors on a whiteboard,” Shuler recalled. “Obviously, you can’t do that for very long. It’s not scalable.”

The need for effective virtual learning at scale came to the fore in early 2020 when schools closed at the height of the COVID pandemic, and in its aftermath when educators confronted widespread COVID learning loss. Studies have shown that one of the most effective interventions to mitigate COVID learning loss was “high-impact tutoring” (HIT), which is typically defined as tutoring in small groups (no more than four students) led by a trained tutor at least three times a week. But intensive, in-person tutoring is expensive. There aren’t enough properly trained tutors, nor enough funding in most school districts, to provide the service to anywhere near the number of students who need it. Many have looked to technology for help, including programs like Hoot Reading.

Carly Shuler talks about the Family Story Play project to the Hoot team.

An early evaluation of Hoot by literacy specialist Dr. Susan Neuman, a professor of childhood and literacy education at New York University, found that adding twice-weekly, 30-minute Hoot sessions to the in-person tutoring of a summer reading program boosted gains in word reading fluency by 86 percent and in oral reading fluency by 152 percent. More recently, Hoot partnered with the National Student Support Accelerator at Stanford University, which conducted a randomized controlled trial of Hoot Reading during the 2024-25 school year involving 1,400 elementary school students in the Kansas City Public Schools who were reading below grade level. Early findings of the ongoing study show that Hoot students significantly outperformed control-group students on the i-Ready assessment, and that the effect size is larger for students designated as requiring Tier 3 intervention. Hoot students also achieved higher i-Ready typical growth and stretch growth scores than control-group students, meaning they are closer to achieving grade-level proficiency.

In addition to rigorous efficacy research, Hoot Reading has also earned the Tutoring Design Badge from the National Student Support Accelerator (NSSA) at Stanford University, demonstrating alignment with research-based program design practices. NSSA acknowledged that Hoot’s Tutoring Program excelled particularly in the areas of High-Quality Instructional Materials, Program Effectiveness, Tutor Selection, and Data Privacy.

One of the most significant developments for digital tutoring occurred in November 2022, when ChatGPT’s launch demonstrated the conversational prowess and lightning-fast knowledge retrieval of generative artificial intelligence. Both the hype and the headlines have since swirled around the potential for AI to tutor students directly. But Shuler and most experts in the field say the human connections between teachers and learners are too valuable to sacrifice. They say a far more effective use of AI is to support and expand the reach of human tutors, rather than replace them.

For instance, AI could be used to make personalized recommendations for the dosage of tutoring needed by different students instead of giving every student the same three sessions a week. The technology could also help review and analyze human tutoring sessions, to spot patterns where instruction is more or less effective.

“Quality assurance becomes so important, and increasingly difficult, as we scale,” said Shuler. “At Hoot, we’re not going to use an AI to tutor the student, but rather we will use AI to tutor the tutor and to tell us as an organization if there’s a teacher who needs more coaching.” AI works in the background, helping tutors assess exactly what a child needs to be working on and suggesting resources to address specific gaps in learning. “So instead of continuously assessing the student, the tutor is free to do what they do best,” Shuler said, “which is teaching and connecting with the students.”

Reimagining AI Through the Lens of Children’s Well-Being

This blog post was originally published by foundry10 and appears here with permission.

Researcher Stefania Druga, showcasing ChemBuddy, a multimodal AI chemistry assistant she developed.

On a late June morning at Reykjavík University in Iceland, researchers, educators, clinicians, and designers from all over the world convened for the 24th annual ACM Interaction Design and Children (IDC) Conference to share the latest research findings, innovative methodologies, and new technologies in the areas of inclusive child-centered design, learning, and interaction.

foundry10 Senior Researcher Jennifer Rubin and Technology, Media, and Information Literacy Team Lead Riddhi Divanji were excited to co-lead a hybrid workshop this year at the conference on “Designing AI for Children’s Well-Being.” Participants in the workshop applied from a wide range of fields and industries to share their ideas and learn from others.

“Educators, designers, researchers, clinicians, and caregivers/parents have had the difficult task of needing to be reactive and responsive to a powerful technology that is ubiquitously available to youth, but not designed with safeguards for child development and well-being in mind. This workshop brought all of these stakeholders together to build a shared language around designing AI with children’s well-being at the center. The energy and openness in the room made it clear: people are ready to move from conversation to action,” said Divanji.

The workshop was co-organized alongside Rotem Landesman (University of Washington), Medha Tare (Joan Ganz Cooney Center at Sesame Workshop), and Azadeh Jamalian (The GIANT Room). Together, they guided participants through discussions and activities focused on The Responsible Innovation in Technology for Children (RITEC) Toolbox, a research-based guide and framework created by UNICEF and the LEGO Group for designers to build more thoughtful, child-centered digital experiences.

How to Design for Children’s Well-Being in Digital Play

The RITEC project was developed in response to conversations with children ages 8-12 from around the world. The framework maps how the design of children’s digital experiences affects their well-being and provides guidance as to how informed design choices can promote positive well-being. It also identifies eight dimensions of children’s subjective well-being that well-designed digital technologies should support.

Using this framework as a guide for their conversation, 15 IDC workshop participants discussed their ideas, projects, and proposals for how AI can be designed and used to promote children’s well-being.

“I was struck by how applicable the RITEC framework is to a wide variety of products and solutions. I had gone in wondering how easily we would be able to adapt, and I found that not only were people able to choose other products but also other applications (e.g., evaluation instead of design),” said IDC workshop participant Gillian Hayes, Professor at University of California, Irvine.

Learn more about some of the proposals, ideas, and research projects workshop participants discussed below.

Opening AI Spaces for Youth Mental Health Support

Jocelyn Skillman, a licensed therapist who participated in the workshop, shared a prototype of ShadowBox—a trauma-informed AI companion designed to hold space for youth navigating violent ideation or overwhelming inner experiences.

“It is not a therapeutic substitute or crisis tool, but a proposed experiment in relational design: an attempt to create a slow, steady digital presence that can model containment, warmth, and emotional pacing,” said Skillman.

ShadowBox stems from Skillman’s clinical work with children and adolescents who experience intrusive or violent thoughts. Many individuals with homicidal or suicidal ideation fear the consequences of disclosure—believing they will be hospitalized, punished, or misunderstood.

“In traditional American care systems, these fears are often justified—violent ideation is treated as inherently dangerous, rather than as a signal of underlying distress,” said Skillman.

Though she sees the risks inherent in a relational AI tool, she can also imagine the benefits of an anonymous, low-stakes space for youth to “experiment with language, regulate affect, and feel witnessed—without fear of surveillance, diagnosis, or moral panic.”

Skillman says the most impactful part of the workshop for her was “learning about all the resources and research from other participants. I will continue to think about the tension between AI as an ally and resource versus a threat to youth’s development.”

Insights on Teaching AI and Digital Literacy Skills in Middle School Classrooms

Workshop participant Anna Baldi, a middle school technology teacher at The Evergreen School in Shoreline, WA, integrates digital literacy skills with hands-on learning in video production, graphic design, robotics, and programming. This year, she partnered with a social studies teacher to introduce AI tools into the 8th grade curriculum, focusing on writing prompts and on editing and fact-checking AI-generated content, which led to meaningful discussions about the pros and cons of these tools.

“Speaking with students, I have observed the consequences of technology in their lives, including plagiarism, cyberbullying, and social media addiction. However, I’ve also seen students create impressive multimedia projects and engage in thoughtful conversations about AI’s role in their education,” shared Baldi.

In Baldi’s experience, the classroom offers an ideal space for students to explore AI technologies under the guidance of informed educators and caregivers.

“In many ways, technology breaks down the barrier between home and school, so a three-way partnership between teachers, youth, and caregivers is essential for a unified and informed approach to AI tools,” said Baldi.

Baldi sees AI as a valuable tool when used to support personalized learning and foster students’ creativity, autonomy, and emotional growth. When asked how she would apply what she learned in the workshop to her own work in the classroom, Baldi said:

“As an educator I was really interested in the idea that children need to perceive their own learning. It’s made me consider how much my students’ skill levels and improvement are visible to them, and how I can help them see their personal growth throughout the learning process rather than just at the end.”

Designing AI Tools and Guidance to Prioritize Well-Being

AI is showing up in more and more everyday products, including those made for young children ages two to six. As these tools become part of family life, it’s important to make sure they support healthy child development and youth well-being. Right now, there’s a gap between what researchers know and what product designers need in order to build youth-friendly AI.

To help close that gap, the Digital Wellness Lab at Boston Children’s Hospital is leading a multi-phase research project reviewing current studies and gathering expert input to create a practical, easy-to-use guide for designers.

According to IDC workshop participant Brinleigh Murphy-Reuter, a Program Administrator at the Digital Wellness Lab, preschoolers may benefit from personalized learning experiences with familiar characters that can respond like real friends. But some young children are also forming bonds with AI itself—like smart speakers or robot toys—which we don’t fully understand yet. As AI technology rapidly evolves, it’s crucial to learn how to design products that support children’s well-being and development.

Research is still in progress, but preliminary findings show that understanding the emotional impact and formation of relationships between early learners and AI-driven characters is an under-explored research area.

“By studying the importance of AI as a tool, not a replacement for human interaction for young users, researchers can play an important role in bridging the gap between research and industry implementation,” said Murphy-Reuter.

Stefania Druga, an independent researcher focused on novel, multimodal AI applications, also highlighted the importance of prioritizing youth well-being when designing AI tools with her project, ChemBuddy. This tool acts as an AI tutor or “lab partner” for middle-school chemistry students. It incorporates a variety of tangible sensors and interfaces that students can interact with to get answers to questions or extra guidance in real-time.

ChemBuddy helps reduce cognitive load and common frustrations that can occur when students learn about abstract concepts. It makes learning concrete through hands-on activities and uses Socratic dialogue to guide students toward more accurate understandings of concepts if it detects a misconception, instead of just providing direct answers.

This personalized support encourages students to articulate their thoughts and build their own understandings, promoting deeper learning, intellectual agency, and enhanced student well-being in learning environments.

The Future of Designing AI for Children’s Well-Being

The “Designing AI for Children’s Well-Being” workshop highlighted just how essential it is to create space for collaboration across disciplines when developing technology for young people. By grounding conversations in the RITEC framework and surfacing insights from educators, clinicians, designers, and researchers alike, participants reaffirmed the importance of designing AI not just for profit, but with children’s emotions, safety, and autonomy in mind.

“I think the workshop did a brilliant job of drawing out how AI needs to be ethically aligned with how children grow, play, and make meaning. This mirrors wider ethical concerns around transparency, power asymmetries, and consent, but it’s even more urgent with child-centered AI, because children’s developmental trajectories can alter depending on the systems they interact with,” said IDC workshop participant, Dr. Nomisha Kurian, Assistant Professor in the Department of Education Studies at University of Warwick.

As AI continues to shape children’s day-to-day experiences, this workshop served as both a call to action and a reminder: centering children’s voices and well-being must remain at the heart of innovation.

“Our greatest responsibility is not to the algorithms we create, but to the children who will inherit the world we build with them.” —workshop participant Cristine Legare, Professor of Psychology at the University of Texas at Austin.

Learn more about the Digital Technologies and Education Lab and the Technology, Media, and Information Literacy Team at foundry10.